Looker Studio makes it easy to build visually appealing dashboards with dynamic data sources, putting meaningful insights into the right hands so you can understand and run an efficient, effective business. If you're a Looker Studio power user building those great-looking dashboards, you already know how important those data sources are.

You may have heard that not all data sources are created equal, but what does that mean? There are multiple ways to bring dynamic data into a Looker Studio dashboard. One of the most common is to connect directly to a Google Analytics 4 (GA4) property, which is probably the easiest way to use GA4 data as your data source. However, other approaches offer more flexibility across data use cases and remove some of the limitations of a direct connection.

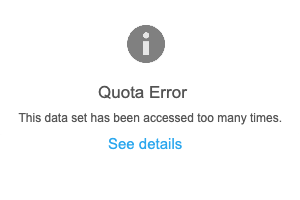

What limitations, you ask? Quotas are a potential obstacle when you connect a Looker Studio report directly to GA4. Each API request consumes tokens, and token budgets are tracked per property and per project on an hourly basis. If you exceed a threshold, you'll receive an error message and have to wait about an hour before you can continue working with your dashboard. Complex requests consume quota faster, such as those involving large datasets with many rows and columns, heavy filtering, or long date ranges.

This is what the error message that replaces your Looker Studio data looks like:

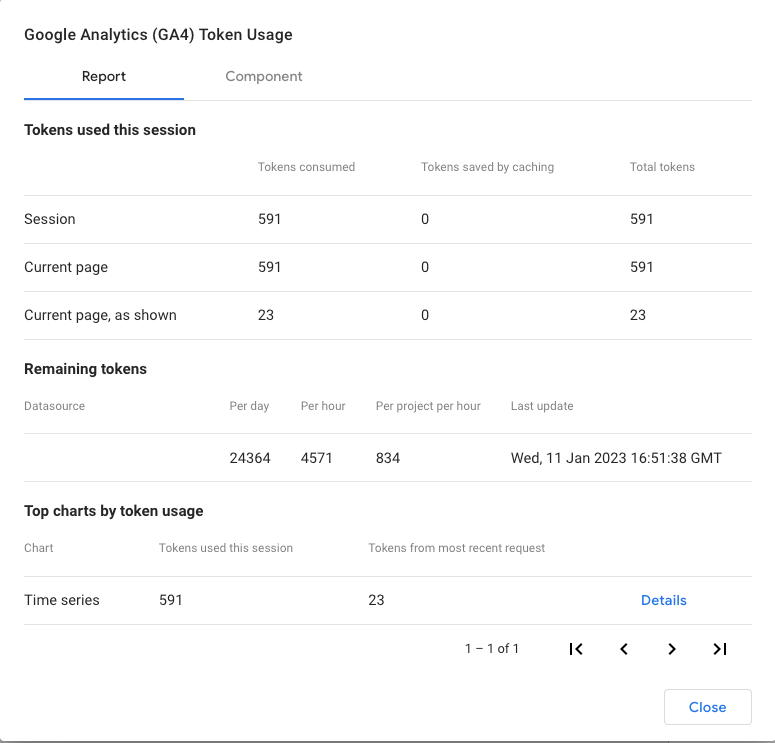

Google groups its GA4 quotas into three categories: core, realtime, and funnel. Looker Studio keeps track of your quota use, and you can check it directly in the UI. To view your token usage, follow these steps:

- Right-click any chart in your dashboard and select "Google Analytics token usage" from the menu

- In the pop-up window, click on "Report"

- The Token Usage report will display how many tokens you have left to use
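Looker Studio's token report covers usage inside your dashboards. If you also query GA4 programmatically, the Google Analytics Data API can report quota status as well: setting `returnPropertyQuota` to true on a `runReport` request adds a `propertyQuota` object to the response, where each quota (for example `tokensPerHour`) carries the tokens `consumed` by that request and the tokens `remaining`. The Python sketch below assumes you already have that object as a parsed JSON dict. The function name `quotas_at_risk`, its `multiplier` parameter, and the sample numbers are hypothetical, for illustration only. It flags any quota that could not absorb `multiplier` more requests of the same size.

```python
from typing import Dict, List

# Each quota entry looks like {"consumed": int, "remaining": int}, mirroring the
# GA4 Data API's QuotaStatus; missing fields are treated as 0 in this sketch.
QuotaStatus = Dict[str, int]


def quotas_at_risk(property_quota: Dict[str, QuotaStatus], multiplier: int = 1) -> List[str]:
    """Return the names of quotas that can't cover `multiplier` more requests
    that cost as much as the one just made (hypothetical helper)."""
    at_risk = []
    for name, status in property_quota.items():
        consumed = status.get("consumed", 0)
        remaining = status.get("remaining", 0)
        # If the remaining budget is smaller than the cost of the next
        # `multiplier` similar requests, the report may soon hit a quota error.
        if remaining < consumed * multiplier:
            at_risk.append(name)
    return sorted(at_risk)


# Illustrative (made-up) values shaped like a `propertyQuota` object.
sample = {
    "tokensPerDay": {"consumed": 12, "remaining": 199000},
    "tokensPerHour": {"consumed": 12, "remaining": 8},
    "tokensPerProjectPerHour": {"consumed": 12, "remaining": 3500},
}

print(quotas_at_risk(sample))  # ['tokensPerHour']
```

Comparing `remaining` against `consumed` avoids hard-coding quota limits, which differ between standard and GA4 360 properties; raising `multiplier` gives an earlier warning.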

So what's the alternative to connecting directly to GA4? You can use BigQuery to create your data sources (tables). We recommend deploying an Extract, Transform, and Load (ETL) application like Launchpad, which transfers data from GA4 into BigQuery, your data warehouse. ETL applications automate the transfer process, so you don’t need to write any code to set one up. Perhaps more importantly, by moving your data into a warehouse first, you’ll have full control over it and start building historical records that can guide future business decisions.

BigQuery does offer a native GA4 export, but it is only forward-looking: it exports data from the point the link is set up, so past GA4 data isn't accessible through it unless you use an ETL. The native export also writes nested records, which means transformation work (SQL) is required to normalize the data. An ETL application handles both past and present GA4 data and transfers flattened records, which greatly reduces the amount of SQL needed to make the data usable. Finally, looking at the bigger picture, an ETL transfers data from a variety of preconfigured sources, such as Facebook, Bing, LinkedIn, and many more, making it easy to house all of your data in one place – not just your GA4 data.

Easy Steps to Leverage an ETL and BigQuery for Looker Studio Reports

Connecting these platforms and automating the work is easier than you might think, and it's all part of creating an effective data pipeline. After a few simple steps, you'll be able to sit back and enjoy accurate, up-to-date records whenever you need them.

- Connect to your data via an ETL

- Transfer your data to Google BigQuery

- Connect Looker Studio to your data (table) in BigQuery

- Connect your charts in Looker Studio to the new data source

Learn more about automating your reports with Launchpad, Google BigQuery, and Looker Studio

Try It for Free

One of the best things about adding Launchpad to your data pipeline is that you can do it for free. Launchpad offers a free 14-day trial, which includes access to two data sources, unlimited destinations, and up to 100,000 records. That should be more than enough for you to get your data stack in shape and start seeing the benefits of unified data.